Find Out How to Scrape an HTML Table with Python

Andrei Ogiolan on Apr 11 2023

Introduction

Web scraping is a powerful tool that allows you to extract data from websites and use it for a variety of purposes, such as data mining, data analysis, and machine learning. One common task in web scraping is extracting data from HTML tables, which can be found on a variety of websites and are used to present data in a structured, tabular format. In this article, we will learn how to use Python to scrape data from HTML tables and store it in a format that is easy to work with and analyze.

By the end of this article, you will have the skills and knowledge to build your own web scraper that can extract data from HTML tables and use it for a variety of purposes. Whether you are a data scientist looking to gather data for your next project, a business owner looking to gather data for market research, or a developer looking to build your own web scraping tool, this article will provide a valuable resource for getting started with HTML table scraping using Python.

What are HTML tables?

HTML tables are elements in HTML (HyperText Markup Language) used to represent tabular data on a web page. An HTML table consists of rows and columns of cells, which can contain text, images, or other HTML elements. HTML tables are created using the ‘<table>’ element and are structured using the ‘<tr>’ (table row), ‘<td>’ (table cell), ‘<th>’ (table header), ‘<caption>’, ‘<col>’, ‘<colgroup>’, ‘<tbody>’ (table body), ‘<thead>’ (table head), and ‘<tfoot>’ (table foot) elements. Let’s go through each one in more detail:

- table element: Defines the start and end of an HTML table.

- tr (table row) element: Defines a row in an HTML table.

- td (table cell) element: Defines a cell in an HTML table.

- th (table header) element: Defines a header cell in an HTML table. Header cells are displayed in bold and centered by default, and are used to label the rows or columns of the table.

- caption element: Defines a caption or title for an HTML table. The caption is typically displayed above or below the table.

- col and colgroup elements: Define the properties of the columns in an HTML table, such as width or alignment.

- tbody, thead, and tfoot elements: Define the body, head, and foot sections of an HTML table, respectively. These elements can be used to group rows and apply styles or attributes to a specific section of the table.
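To make the elements listed above concrete, here is a minimal sketch that builds a small table as a string and reads each section back with Beautiful Soup (introduced later in this article). The table contents are hypothetical and exist only for illustration.

```python
from bs4 import BeautifulSoup

# A hypothetical table, written only to illustrate the elements listed above
html = """
<table>
  <caption>Monthly savings</caption>
  <thead>
    <tr><th>Month</th><th>Savings</th></tr>
  </thead>
  <tbody>
    <tr><td>January</td><td>$100</td></tr>
    <tr><td>February</td><td>$80</td></tr>
  </tbody>
  <tfoot>
    <tr><td>Total</td><td>$180</td></tr>
  </tfoot>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
table = soup.find("table")

# Each section of the table can be reached through its own element
caption = table.caption.get_text(strip=True)
headers = [th.get_text(strip=True) for th in table.thead.find_all("th")]
body_rows = [
    [td.get_text(strip=True) for td in tr.find_all("td")]
    for tr in table.tbody.find_all("tr")
]
footer = [td.get_text(strip=True) for td in table.tfoot.find_all("td")]

print(caption)
print(headers)
print(body_rows)
print(footer)
```

Many real-world tables omit ‘<thead>’, ‘<tbody>’, and ‘<tfoot>’ entirely, so a scraper should not assume they are present.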

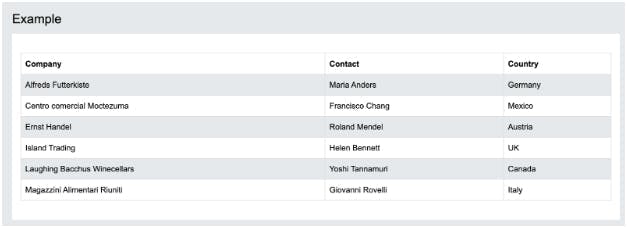

For a better understanding of this concept, let’s see what an HTML table looks like:

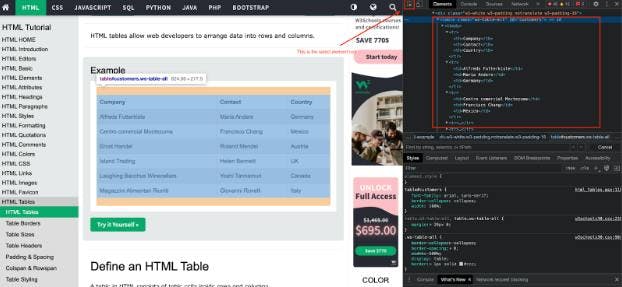

At first glance it looks like a normal table, and we cannot see the structure built from the elements described above. That does not mean they are not present; it means the browser has already parsed the HTML for us. To see the HTML structure, you need to go one step deeper and use the browser's dev tools. Right-click on the page, click Inspect, choose the select element tool, and click on the element (the table in this case) whose HTML structure you want to see. After following these steps, you should see the table's underlying HTML markup.

HTML tables are commonly used to present data in a structured, tabular format, for example to tabulate results or display the contents of a database. They can be found on a wide variety of websites and are an important element to consider when extracting data from the web.

Setting up

Before we can start scraping data from HTML tables, we need to set up our environment and make sure that we have all of the necessary tools and libraries installed. The first step is to make sure that you have Python installed on your computer. If you do not have Python installed, you can download it from the official Python website (https://www.python.org/) and follow the instructions to install it.

Next, we will need to install some libraries that will help us scrape data from HTML tables. Some of the most popular libraries for web scraping in Python are Beautiful Soup, Selenium, and Scrapy. In this article the focus will be on Beautiful Soup, since it is very straightforward compared to the others. Beautiful Soup is a library that makes it easy to parse HTML and XML documents, and it is particularly useful for extracting data from web pages. While this is enough to scrape the HTML data we are looking for, raw HTML is not easy for a human to read, so you will probably want to convert the data into a more readable form. This is where the Pandas library comes into play.

Pandas is a data analysis library that provides tools for working with structured data, such as HTML tables. You can install these libraries using the pip package manager, which is included with Python:

$ pip install beautifulsoup4 pandas

Once you have Python and the necessary libraries installed, you are ready to start scraping data from HTML tables. In the next section, we will walk through the steps of building a web scraper that can extract data from an HTML table and store it in a structured format.

Let's start scraping

Now that we have our environment set up and have a basic understanding of HTML tables, we can start building a web scraper to extract data from an HTML table. In this section, we will walk through the steps of building a simple scraper that can extract data from a table and store it in a structured format.

The first step is to use the requests library to send an HTTP request to the webpage that contains the HTML table that we want to scrape.

You can install it using pip, like any other Python package:

$ pip install requests

This library allows us to retrieve the HTML content of a web page as a string:

import requests

url = 'https://www.w3schools.com/html/html_tables.asp'
html = requests.get(url).text

Next, we will use the BeautifulSoup library to parse the HTML content and extract the data from the table. BeautifulSoup provides a variety of methods and attributes that make it easy to navigate and extract data from an HTML document. Here is an example of how to use it to find the table element and extract the data from the cells:

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'html.parser')

# Find the first table element on the page
table = soup.find('table')

# Extract the data from the cells
data = []
for row in table.find_all('tr'):
    cols = row.find_all('td')
    # A row with no <td> cells is a header row, so read its <th> cells instead
    if len(cols) == 0:
        cols = row.find_all('th')
    cols = [ele.text.strip() for ele in cols]
    # Get rid of empty values (note: this shifts columns if a cell is legitimately empty)
    data.append([ele for ele in cols if ele])

print(data)

The data list now holds one inner list per table row, each containing that row's cell values. To make it more readable, we can easily pass the content to a Pandas DataFrame:

import pandas as pd

# Take the header row out of the data list so that Pandas
# does not treat it as a regular data row
headers = data.pop(0)
df = pd.DataFrame(data, columns=headers)

print(df)

Once you have extracted the data from the table, you can use it for a variety of purposes, such as data analysis, machine learning, or storing it in a database. You can also modify the code to scrape multiple tables from the same web page or from multiple web pages.
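As a rough sketch of both ideas, the example below converts every table on a page into its own DataFrame and then stores each one in a SQLite database. The HTML string, the table ids, and the table_to_dataframe helper are hypothetical and stand in for a live page; the sketch also assumes that each table has an id attribute, that its first row holds the headers, and that tables are not nested.

```python
import sqlite3

import pandas as pd
from bs4 import BeautifulSoup

# Hypothetical page with two tables, used here instead of a live request
html = """
<table id="customers">
  <tr><th>Company</th><th>Country</th></tr>
  <tr><td>Alfreds Futterkiste</td><td>Germany</td></tr>
  <tr><td>Centro comercial Moctezuma</td><td>Mexico</td></tr>
</table>
<table id="orders">
  <tr><th>Item</th><th>Quantity</th></tr>
  <tr><td>Keyboard</td><td>3</td></tr>
</table>
"""


def table_to_dataframe(table):
    """Convert one <table> tag into a DataFrame, treating the first row as headers."""
    rows = []
    for tr in table.find_all("tr"):
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        if cells:
            rows.append(cells)
    headers, *body = rows
    return pd.DataFrame(body, columns=headers)


soup = BeautifulSoup(html, "html.parser")

# One DataFrame per table, keyed by the table's id attribute
frames = {t["id"]: table_to_dataframe(t) for t in soup.find_all("table")}

# Store each table in an in-memory SQLite database (a file path works the same way)
conn = sqlite3.connect(":memory:")
for name, df in frames.items():
    df.to_sql(name, conn, index=False, if_exists="replace")

# Read one table back to confirm it was stored
stored = pd.read_sql_query("SELECT * FROM customers", conn)
print(stored)
conn.close()
```

All values are stored as text here, because scraped cells are strings; converting columns such as Quantity to numbers would be a separate cleaning step.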

Please keep in mind that not all websites are this easy to scrape. Many of them have implemented protection measures designed to prevent scraping, such as CAPTCHAs and IP address blocking. Luckily, there are third-party services such as WebScrapingAPI which offer IP rotation and CAPTCHA bypass, enabling you to scrape those targets.

I hope this section provided a helpful overview of the process of scraping data from an HTML table using Python. In the next section, we will discuss some of the ways you can improve this process, along with web scraping best practices.

Getting more advanced

While the scraper we built in the previous section is functional and can extract data from an HTML table, there are a number of ways we can improve and optimize it to make it more efficient and effective. Here are a few suggestions:

- Handling pagination: If the HTML table you are scraping is spread across multiple pages, you will need to modify the scraper to handle pagination and scrape data from all of the pages. This can typically be done by following links or using a pagination control, such as a "next" button, to navigate to the next page of data.

- Handling AJAX: If the HTML table is generated using AJAX or JavaScript, you may need to use a tool like Selenium to execute the JavaScript and load the data into the table. Selenium is a web testing library that can simulate a user interacting with a web page and allow you to scrape data that is dynamically generated. A good alternative to that is to use our scraper which can return the data after JavaScript is rendered on the page. You can learn more about this by checking our docs.

- Handling errors: It is important to handle errors and exceptions gracefully in your scraper, as network or server issues can cause requests to fail or data to be incomplete. You can use try/except blocks to catch exceptions and handle them appropriately, such as retrying the request or logging the error.

- Scaling the scraper: If you need to scrape a large amount of data from multiple tables or websites, you may need to scale your scraper to handle the increased workload. This can be done using techniques such as parallel processing or distributing the work across multiple machines.
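The pagination and error-handling suggestions above could be sketched roughly as follows. This is one possible approach, not a definitive implementation: the function names fetch_html and scrape_paginated_table are hypothetical, and the sketch assumes the site marks its next-page link with rel="next", which varies from site to site.

```python
import time
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def fetch_html(url, attempts=3, delay=1.0):
    """Download a page, retrying a few times on network or HTTP errors."""
    for attempt in range(1, attempts + 1):
        try:
            response = requests.get(url, timeout=10)
            response.raise_for_status()  # turn 4xx/5xx responses into exceptions
            return response.text
        except requests.RequestException as exc:
            if attempt == attempts:
                raise  # out of retries: let the caller decide what to do
            print(f"Attempt {attempt} failed for {url}: {exc}")
            time.sleep(delay)


def scrape_paginated_table(start_url, fetch=fetch_html, max_pages=50):
    """Collect data rows from the first table on start_url and on every "next" page."""
    rows, url, seen = [], start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        soup = BeautifulSoup(fetch(url), "html.parser")
        table = soup.find("table")
        if table is not None:
            for tr in table.find_all("tr"):
                # Only <td> cells are collected, so repeated header rows are skipped
                cells = [td.get_text(strip=True) for td in tr.find_all("td")]
                if cells:
                    rows.append(cells)
        # Assumption: the next-page link carries rel="next"; adjust for your target site
        next_link = soup.find("a", rel="next")
        url = urljoin(url, next_link["href"]) if next_link else None
    return rows
```

Calling scrape_paginated_table with a start URL returns the rows from all pages as a single list. The seen set and the max_pages limit guard against pagination loops, and the fetch parameter makes it easy to swap in a different downloader, for example one that renders JavaScript.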

By improving and optimizing your web scraper, you can extract data more efficiently and effectively, and ensure that your scraper is reliable and scalable. In the next section, we will discuss why using a professional scraper service may be a better option than building your own scraper.

Summary

In this article, we covered the basics of web scraping and showed you how to build a simple Python scraper to extract data from an HTML table. While building your own scraper can be a useful and educational exercise, there are a number of reasons why using a professional scraper service may be a better option in many cases:

- Professional scrapers are typically more reliable and efficient, as they are designed and optimized for web scraping at scale.

- Professional scrapers often have features and capabilities that are not available in homemade scrapers, such as support for CAPTCHAs, rate limiting, and handling AJAX and JavaScript.

- Using a professional scraper can save you time and resources, as you don't have to build and maintain your own scraper.

- Professional scrapers often offer various pricing options and can be more cost-effective than building your own scraper, especially if you need to scrape large amounts of data.

While building your own scraper can be a rewarding experience, in many cases it may be more practical and cost-effective to use a professional scraper service. In the end, the decision of whether to build your own scraper or use a professional service will depend on your specific needs and resources.

I hope this article provided a helpful overview of web scraping and the process of building a simple HTML table scraper with Python.
